I’ve been learning about AI and computer vision with my Jetson Nano. I’m hoping to have it use my cameras to improve my home automation. Ultimately, I want to install external security cameras which will detect and scare off the deer when they approach my fruit trees. However, to start with I decided I would automate a ‘very simple’ problem.

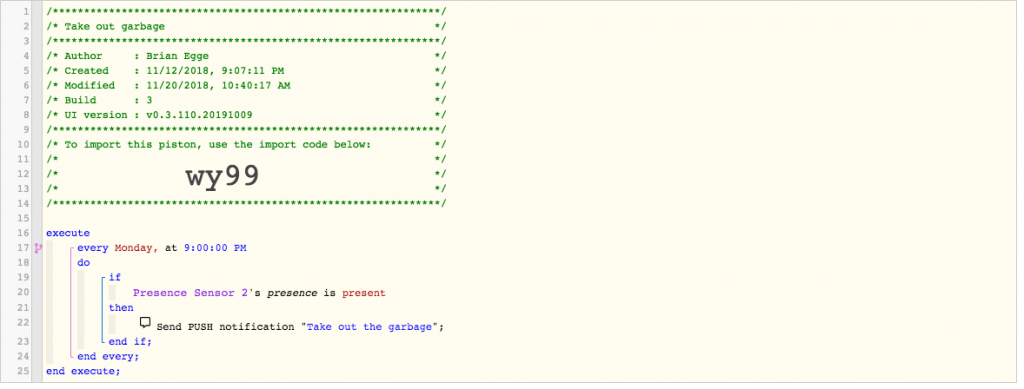

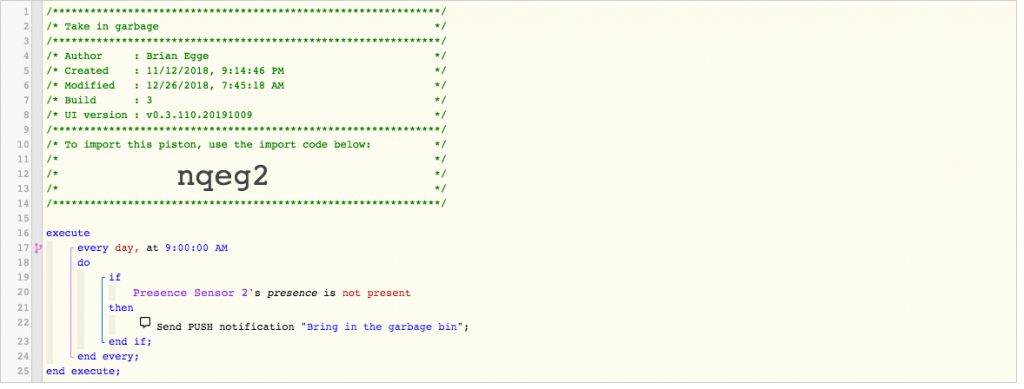

Take out the garbage reminder

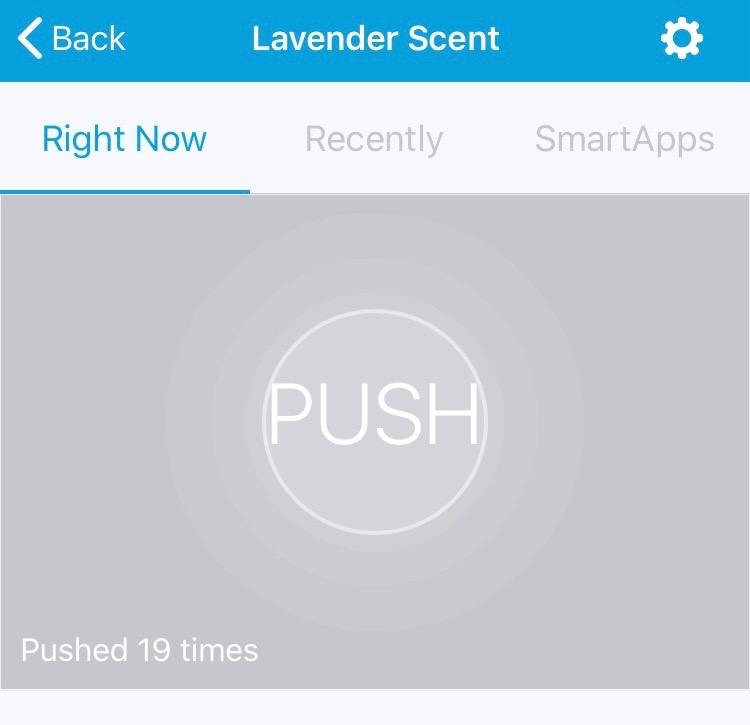

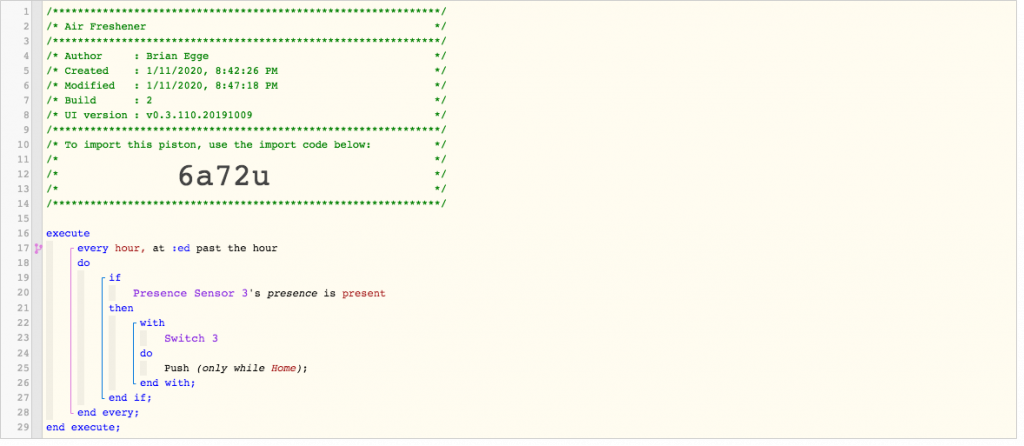

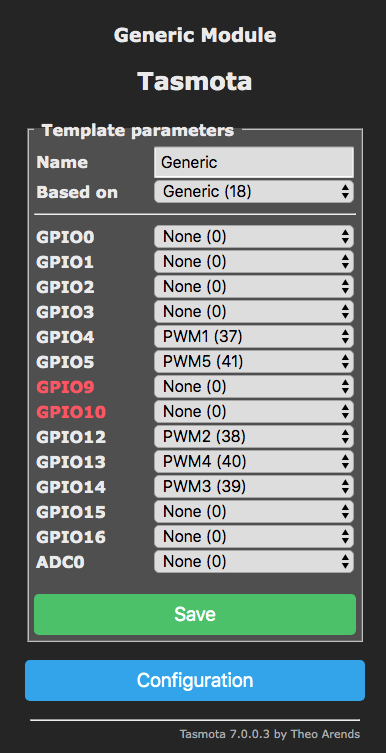

For some time I’ve had reminders to bring out the garbage and to bring it back in, plus a thank-you message once someone brings it in. This is done with a few WebCore pistons:

To decide whether the garbage is in the garage, I attached a TrackR tile to the bin, which is detected by my Raspberry Pi 3. Unfortunately, if the battery dies or gets too cold, it stops working. I could attach a larger battery to the tile, but it has to be attached to my bin, so I don’t want something too big. So I decided it should be trivial to have a camera learn whether the garbage bin is present and then update its presence in SmartThings. It took me only a few minutes to train an image classifier on https://teachablemachine.withgoogle.com/, so I thought this was doable.

First I mounted a USB camera to the ceiling in the garage and attached it to the Raspberry Pi. I then spent a few days learning how to access the camera, what my options were for streaming from it, and so on. Ultimately, I decided to use fswebcam to grab the images.

    fswebcam --quiet --resolution 1920 --no-banner --no-timestamp --skip 20 $image
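If you want to collect a batch of training images from a script, the same command can be wrapped in a few lines of Python. This is only a sketch: the `garage-<timestamp>.jpg` naming and the function names are my own placeholders, not something from the original setup, and the flags are exactly the ones shown above.

```python
import subprocess
from datetime import datetime
from pathlib import Path


def fswebcam_command(image_path, resolution="1920", skip_frames=20):
    """Build the fswebcam invocation shown above as an argument list."""
    return [
        "fswebcam", "--quiet",
        "--resolution", str(resolution),
        "--no-banner", "--no-timestamp",
        "--skip", str(skip_frames),  # discard early frames before saving one
        str(image_path),
    ]


def capture(directory, run=subprocess.run):
    """Capture one frame into `directory` and return the new image path.

    The timestamped file name is a hypothetical convention for this sketch.
    `run` is injectable so the function can be exercised without a camera.
    """
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    image_path = Path(directory) / f"garage-{stamp}.jpg"
    run(fswebcam_command(image_path), check=True)
    return image_path
```

Calling `capture("/var/images")` on the Pi would then drop one new frame into that directory each time it runs.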

Once I had a collection of images, I installed labelImg on my Nano, because for this project I didn’t just want to do image classification but object detection. In hindsight, it would have been much simpler to crop my image to the general area where the bins reside and then train an object detector.

After assembling about 20 images, I copied scripts around to create all the supporting files for TensorFlow. I went from text to CSV to XML to protocol buffers. In the end, I had something ready to train. I attempted to train on the Nano, but soon came to the realization it was never going to work. My other PCs don’t have a modern GPU for running AI tasks, so my hope had been to get it to work with the Nano. I learned about renting servers, but that was going to add costs and complications. I then learned about Google Colab, which (for now) gives you free runtimes with a good GPU or TPU. Once it is running, you find out what kit your runtime has; I’ve gotten different hardware on different runs. My last run used the Tesla P100-PCIE-16GB. That’s a $5,000 card which not even NVIDIA is going to let me try out for free.

It took me a long time to get the pieces together in one notebook to be able to train my model. Certainly not the drag and drop of the Teachable Machine.
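To give a feel for the file shuffling mentioned above, here is a minimal sketch of one step in that chain: flattening a labelImg annotation into CSV rows. It assumes labelImg was saving in its Pascal VOC XML format; the column order and the helper names are my own choices for illustration and are not taken from the scripts I actually used.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Column layout is an assumption for this sketch.
CSV_COLUMNS = ["filename", "width", "height", "class",
               "xmin", "ymin", "xmax", "ymax"]


def voc_to_rows(xml_text):
    """Return one dict per labelled box in a Pascal VOC annotation string."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    width = int(root.findtext("size/width"))
    height = int(root.findtext("size/height"))
    rows = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        rows.append({
            "filename": filename,
            "width": width,
            "height": height,
            "class": obj.findtext("name"),
            "xmin": int(box.findtext("xmin")),
            "ymin": int(box.findtext("ymin")),
            "xmax": int(box.findtext("xmax")),
            "ymax": int(box.findtext("ymax")),
        })
    return rows


def rows_to_csv(rows):
    """Serialise the rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=CSV_COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

The resulting CSV is the kind of intermediate file that a later script would turn into the protocol buffer records TensorFlow trains from.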

One thing which helped a lot was tuning the augmentation options. I know the camera is fixed, so I don’t need to have it flip or crop the image. Since the garage has windows, though, the lighting can change a lot depending on the time of day, so I kept the brightness and saturation adjustments. I didn’t set up TensorBoard, but it quickly goes from 0.5% loss after a few steps. I have a small sample and a fixed camera, which helps.

data_augmentation_options {

random_adjust_brightness {

}

}

data_augmentation_options {

random_adjust_saturation {

}

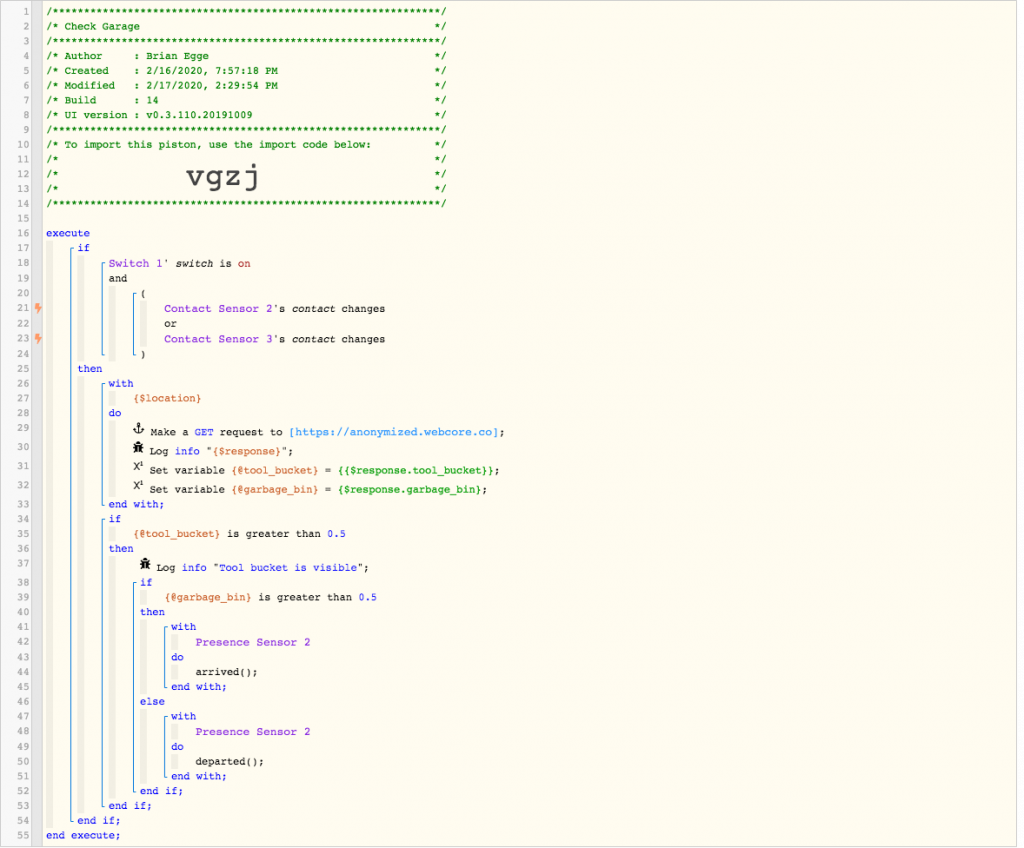

}Once running in the notebook I then spend another few days getting the model to run on my Jetson Nano. NVidia did not make this easy. Ultimately, I downgraded to TensorFlow 1.14.0 and patched one of the model files. Eventually I got it running, then I just needed to get it to work with SmartThings. Since the bins are really only going to move when the garage doors open, I don’t need to do this detection in real time. I want WebCore to query the garage when it detects the doors open or close. I have it do this by querying a web service on my Raspberry Pi:

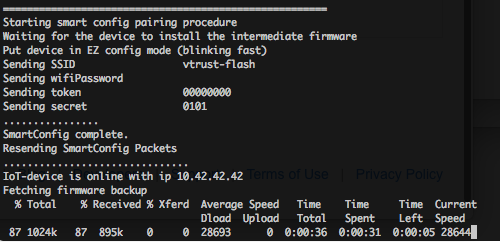

On the Raspberry Pi, I want it to snap an image, and send it to the Jetson for analysis. I wrote the world’s dumbest web service, installing it with inetd:

#!/bin/sh

0<&-

image=$(mktemp /var/images/garage.XXXXXXX.jpg)

/bin/echo -en "HTTP/1.0 200 OK\r\n"

fswebcam --quiet --resolution 1920 --no-banner --no-timestamp --skip 20 $image

/bin/echo -en "Content-Type: application/json\r\n"

curl --silent -H "Tranfer-Encoding: chunked" -F "file=@$image" http://egge-nano.local:5000/detect > $image.txt

/bin/echo -en "Content-Length: $(wc -c < ${image}.txt)\r\n"

/bin/echo -en "Server: $(hostname) $0\r\n"

/bin/echo -en "Date: $(TZ=GMT date '+%a, %d %b %Y %T %Z')\r\n"

/bin/echo -en "\r\n"

cat $image.txt

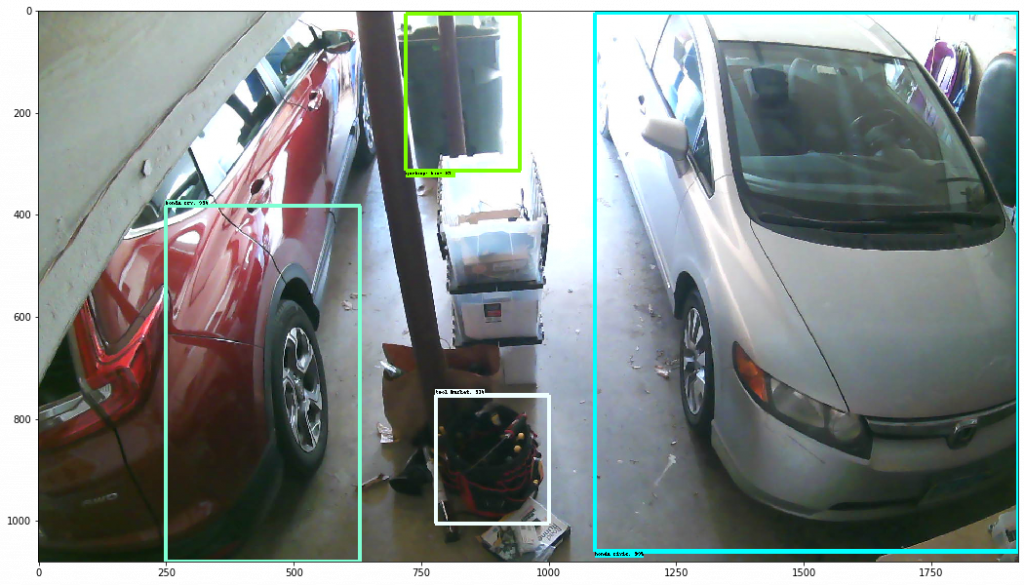

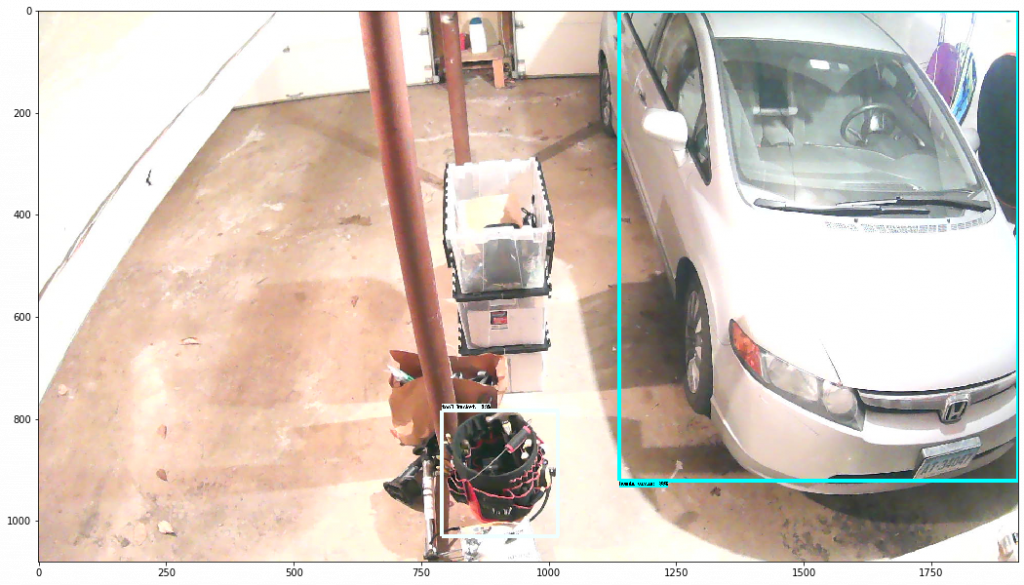

chmod a+r $imageI keep a copy of the image and the response in case I need to retain the model. The image is sent over the jetson, where I have a Flask app running. I wasted a ton of time trying to get Flask to work, basically, if you use debug mode, then OpenCV doesn’t work because of different context loading. I could not seems to get Flask to keep the GPU opened for the life of the request, so on each request I open the GPU and load the model. This is quite inefficient as you may imagine. I also experimented with having the Raspberry Pi stream the video all the time over rtsp and then having ffmpeg save an image when it needs it. The problem seemed to be ffmpeg wasn’t always reliable. If I ran it for a single snapshot, it would not always capture an image. If I ran it continually, after some time it would exit. I have it trained to recognize four objects. If use my tool bucket as a source of truth. If it sees that, then I can assume it’s working, otherwise, I don’t have reliable enough information.
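The tool-bucket check described above boils down to a small decision function. The sketch below is illustrative only: the detection format (a list of dicts with `label` and `score`), the label names, and the 0.5 score threshold are all assumptions for the example, not what the Flask app actually returns.

```python
def bin_presence(detections, bin_label="garbage_bin",
                 reference_label="tool_bucket", min_score=0.5):
    """Decide whether the bin is present, using a reference object as a sanity check.

    Returns "present" or "not present" when the reference object was detected,
    and None when it was not, meaning the result should not be trusted.
    All labels and the threshold are hypothetical defaults.
    """
    # Keep only the labels detected with enough confidence.
    seen = {d["label"] for d in detections if d["score"] >= min_score}
    if reference_label not in seen:
        # Camera blocked, too dark, or the model failed: no reliable answer.
        return None
    return "present" if bin_label in seen else "not present"
```

The point of returning `None` rather than "not present" is that SmartThings only gets updated when the camera demonstrably saw the scene properly.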

The scripts which I adapted are here: https://github.com/brianegge/garbage_bin

I’d like to use an ESP Cam to detect if I have a package on my front steps. Maybe this will be my next project before I work on detecting deer.